Server virtualization is a foundational IT strategy that fundamentally redefines infrastructure management. It involves running multiple, independent virtual servers on a single physical machine, unlocking massive cost savings, streamlining operations, and building a far more agile and resilient infrastructure. This isn't just a technical tweak; it's a strategic shift toward software-defined resource control.

How Server Virtualization Transforms IT Infrastructure

In a traditional data center, each application resides on a dedicated physical server. This model is notoriously inefficient: dedicated servers are commonly cited as running at only 5-15% of their total capacity, which wastes power, cooling, and capital expenditure on a staggering scale.

Server virtualization shatters this inefficient model by introducing a thin software layer called a hypervisor, which allows one physical machine to host numerous virtual machines (VMs). Instead of a one-to-one relationship between hardware and software, you achieve a one-to-many architecture. Workloads are encapsulated into portable, software-defined units, no longer tied to a specific piece of hardware. This abstraction is the key to unlocking the core benefits of server virtualization:

- Maximized Resource Utilization: Consolidate multiple workloads onto fewer servers, driving hardware usage up and slashing waste.

- Reduced Capital and Operational Costs: A smaller hardware footprint directly translates to lower TCO through reduced spending on purchasing, power, cooling, and data center space.

- Enhanced Agility and Speed: Provision a new server in minutes, not days or weeks, dramatically accelerating development, testing, and deployment cycles.

- Improved Disaster Recovery: Encapsulating an entire server into a single file makes backup, replication, and restoration vastly simpler and faster, bolstering business continuity.

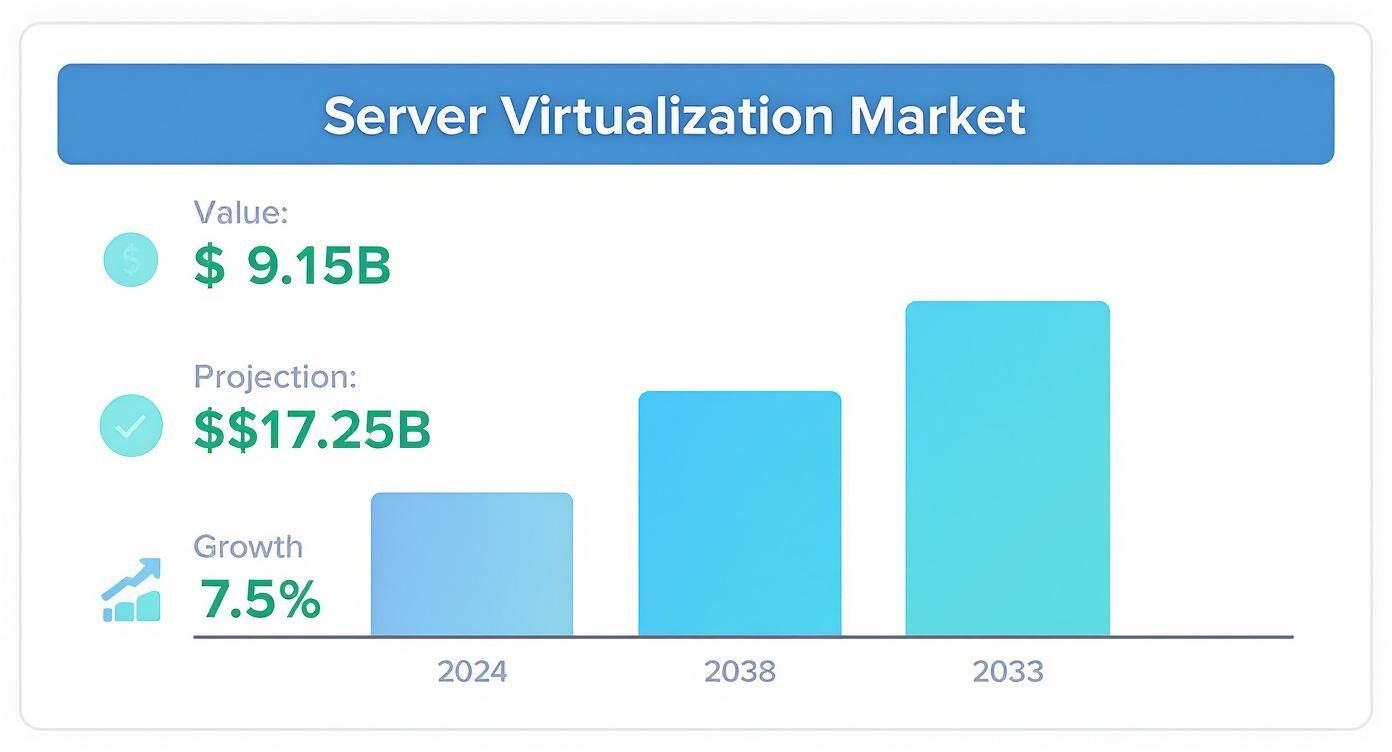

The real-world impact of this efficiency is undeniable. The global server virtualization market was valued at around USD 9.15 billion in 2024 and is projected to reach USD 17.25 billion by 2033. This growth reflects a clear industry-wide move toward more dynamic and cost-effective IT operations.

Leading virtualization platforms such as the KVM-based Proxmox VE and VMware vSphere (built on the ESXi hypervisor) provide powerful tools for managing virtual environments. If you're evaluating options, our detailed Proxmox VE vs VMware vSphere guide offers a technical breakdown. The same principles underpin the cloud services that have reshaped modern infrastructure provisioning and scalability.

Reduce Costs with Strategic Server Consolidation

The most immediate and compelling benefit of server virtualization is its significant impact on the IT budget. By decoupling applications from dedicated physical hardware, you can fundamentally rewrite your cost structure through server consolidation—running multiple virtual machines (VMs) on a single, powerful physical server.

This strategy immediately slashes Capital Expenditures (CapEx). Instead of procuring, racking, and cabling twenty individual servers for twenty workloads, you might need only a small high-availability cluster running a platform like Proxmox VE: two capable hosts plus a lightweight external quorum device (QDevice), because Proxmox VE HA needs a majority vote before it restarts a failed host's VMs elsewhere. This represents a massive reduction in the hardware you must purchase, maintain, and eventually replace.

The savings extend directly to Operational Expenditures (OpEx). Fewer physical machines mean a smaller data center footprint, which translates directly into lower bills for power, cooling, and real estate. Maintenance contracts are simplified, and your IT team spends significantly less time on physical hardware management.

The market figures cited earlier point the same way: the projected growth to USD 17.25 billion by 2033 reflects organizations adopting virtualization as a core strategy for controlling costs and improving efficiency.

Maximizing Hardware and Budget Efficiency

The financial advantages of server consolidation are proven in enterprise and government deployments. We have observed government IT programs achieving consolidation ratios exceeding 10:1—replacing ten underutilized, power-hungry servers with a single efficient host. This level of consolidation yields substantial energy savings and reduces hardware refresh budgets.

A key mechanism for this is more precise resource allocation. In a physical model, an entire server might be dedicated to an application that uses only 10% of its CPU. Virtualization lets you allocate CPU, RAM, and storage to each VM according to its actual needs, eliminating waste and getting more value from your hardware investment.

By pooling resources and allocating them dynamically, virtualization turns idle server capacity from a sunk cost into a flexible asset. This efficiency is the core mechanism that drives down the Total Cost of Ownership (TCO) for IT infrastructure.
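To make the pooling argument concrete, here is a minimal sketch of how a consolidation target could be estimated with first-fit-decreasing bin packing. The `Workload` class and `hosts_needed` function are hypothetical illustrations, and the fleet, host sizes, 70% headroom target, and one spare host are assumptions rather than figures from the deployments mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cores: float    # CPU cores the workload actually uses on average
    ram_gib: float  # RAM the workload actually uses, in GiB


def hosts_needed(workloads, host_cores, host_ram_gib, headroom=0.7, spare_hosts=1):
    """Estimate cluster size with first-fit-decreasing bin packing.

    Each host is filled only up to `headroom` of its cores and RAM, and
    `spare_hosts` are added so the cluster can absorb a host failure (N+1).
    """
    cap_cores = host_cores * headroom
    cap_ram = host_ram_gib * headroom
    # Place the most demanding workloads first; demand is the larger of the
    # workload's CPU share and RAM share of one host's usable capacity.
    ordered = sorted(
        workloads,
        key=lambda w: max(w.cores / cap_cores, w.ram_gib / cap_ram),
        reverse=True,
    )
    hosts = []  # each entry is [cores_used, ram_used]
    for w in ordered:
        if w.cores > cap_cores or w.ram_gib > cap_ram:
            raise ValueError(f"{w.name} does not fit on a single host")
        for host in hosts:
            if host[0] + w.cores <= cap_cores and host[1] + w.ram_gib <= cap_ram:
                host[0] += w.cores
                host[1] += w.ram_gib
                break
        else:  # no existing host had room, so open a new one
            hosts.append([w.cores, w.ram_gib])
    return len(hosts) + spare_hosts


# Hypothetical fleet: 20 servers, each using ~10% of 8 cores and 4 GiB of RAM
fleet = [Workload(f"app{i:02d}", cores=0.8, ram_gib=4) for i in range(20)]
print(hosts_needed(fleet, host_cores=32, host_ram_gib=256))
```

With these assumed numbers the function returns 2 for 32-core, 256 GiB hosts: one host carries all twenty workloads and the second is held in reserve, which is the 10:1 ratio described above. Real sizing should use measured peak utilization, not averages alone.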

A Practical Cost Comparison

Consider a typical scenario: a business running 20 aging physical servers, each consuming power, occupying rack space, and requiring individual maintenance. Now, envision migrating those 20 workloads onto a modern, 2-host Proxmox VE cluster. The table below provides a simplified cost analysis of this transition, highlighting the dramatic savings.

Cost Analysis of Traditional vs Virtualized Infrastructure

| Cost Factor | Traditional Environment (20 Physical Servers) | Virtualized Environment (2 Proxmox Hosts) |
|---|---|---|
| Initial Hardware (CapEx) | High cost for 20 mid-range servers. | Lower cost for 2 high-density servers. |
| Power & Cooling (OpEx) | Significant; each server draws power 24/7. | Drastically reduced; potentially 80-90% less power. |
| Data Center Space (OpEx) | Requires multiple racks. | Requires minimal rack space (e.g., 2U-4U). |
| Software Licensing (OpEx) | Potentially 20 OS licenses; app licenses vary. | Fewer OS licenses; potential for reduced app licensing. |
| Admin Overhead (OpEx) | High; requires managing 20 separate machines. | Low; manage all VMs from a single interface. |
| Maintenance (OpEx) | 20 individual hardware support contracts. | 2 hardware support contracts. |

The comparison makes the value proposition clear. A virtualized environment not only reduces initial hardware outlay but also generates continuing OpEx savings in power, space, and administrative effort. This strategic consolidation is why many organizations see a significant and rapid return on investment.
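The Power & Cooling row can be turned into a quick estimate. The sketch below multiplies IT load by PUE (power usage effectiveness, total facility power divided by IT power) to include cooling overhead. The wattages, electricity price, and PUE of 1.5 are hypothetical inputs; substitute measured figures for your own hardware.

```python
HOURS_PER_YEAR = 24 * 365


def annual_power_cost(servers, watts_per_server, price_per_kwh, pue=1.5):
    """Yearly electricity cost for a group of servers.

    PUE = total facility power / IT power, so multiplying the IT load by PUE
    adds the cooling and power-distribution overhead.
    """
    it_kwh = servers * watts_per_server * HOURS_PER_YEAR / 1000
    return it_kwh * pue * price_per_kwh


# Hypothetical inputs: 20 legacy servers at 300 W vs 2 dense hosts at 700 W, $0.15/kWh
before = annual_power_cost(20, 300, 0.15)
after = annual_power_cost(2, 700, 0.15)
print(f"before ${before:,.0f}/yr, after ${after:,.0f}/yr, saving {1 - after / before:.0%}")
```

Under these assumptions the two hosts use roughly 77% less electricity than the twenty legacy servers; actual savings depend on the measured draw of both environments.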

Gain Unmatched IT Agility and Scalability

Beyond cost savings, one of the most transformative benefits of server virtualization is the immense boost in operational agility and scalability. By breaking the rigid link between software and its underlying physical hardware, virtualization introduces a level of speed and flexibility that is impossible in a traditional physical environment.

Consider a common request: a development team needs a new server environment to test a critical application patch. In a physical world, this process is a bottleneck. An administrator must order hardware, wait for delivery, rack the server, install the OS, and configure the environment. The entire workflow can take days or weeks, stalling innovation.

With virtualization, this workflow shrinks from weeks to minutes. Instead of procuring a new physical machine, you simply clone an existing VM template. On Proxmox VE, a linked clone of a template is ready almost instantly, while a full clone takes longer because every disk is copied; either way, your team can begin work far sooner. This rapid provisioning is a cornerstone of modern DevOps and is crucial for accelerating service delivery.

Actionable Example: Cloning a VM in Proxmox VE

This step-by-step example demonstrates how quickly a KVM virtual machine can be cloned using the Proxmox VE command line—a task easily scripted for automation.

First, identify the VMID of the source VM (running `qm list` on the host shows every VM with its ID) and select a unique, unused VMID for the new clone.

Log into your Proxmox host via SSH. Command-line access is required to execute the `qm` utility.

Execute the `qm clone` command, which creates a copy of an existing VM. The syntax is `qm clone <source_vmid> <new_vmid> --name <clone_name>`:

```shell
# Clone VM 100 to new VM 9001 with the name 'dev-server-clone-01'
qm clone 100 9001 --name dev-server-clone-01
```

- `100` is the ID of our source VM template.

- `9001` is the unique ID assigned to our new clone.

- `--name dev-server-clone-01` provides a human-readable identifier.

Start the new VM. Once the clone is created, boot it with the `qm start` command:

```shell
qm start 9001
```

In three commands, a fully functional, isolated server environment is provisioned and running. This capability is foundational to building a responsive and agile IT infrastructure.
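Because the clone-and-start workflow is just two `qm` invocations, it is easy to script. The sketch below is a minimal Python wrapper, assuming it runs as root on a Proxmox VE node with `qm` on the PATH. The function names are hypothetical, and `dry_run` defaults to `True` so it only prints the commands until you opt in.

```python
import shlex
import subprocess


def build_clone_cmd(source_vmid, new_vmid, name, full=False):
    """Build the argv for `qm clone`; Proxmox VE VMIDs start at 100."""
    for vmid in (source_vmid, new_vmid):
        if vmid < 100:
            raise ValueError(f"invalid VMID {vmid}: Proxmox VE VMIDs start at 100")
    cmd = ["qm", "clone", str(source_vmid), str(new_vmid), "--name", name]
    if full:
        # Copy every disk instead of the default linked clone of a template
        cmd += ["--full", "1"]
    return cmd


def clone_and_start(source_vmid, new_vmid, name, full=False, dry_run=True):
    """Clone a VM and boot the clone; with dry_run=True only print the commands."""
    commands = [
        build_clone_cmd(source_vmid, new_vmid, name, full),
        ["qm", "start", str(new_vmid)],
    ]
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands


clone_and_start(100, 9001, "dev-server-clone-01")
```

Running the wrapper with `dry_run=False` executes the same two commands shown above, stopping at the first one that fails.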

Scaling Resources On-Demand

This rapid provisioning capability extends to resource scaling. If a production application experiences a sudden traffic spike on a physical server, the only remediation is to schedule downtime for a physical hardware upgrade (e.g., installing more RAM).

Virtualization provides elasticity. You can add CPU cores, RAM, or storage to a running VM, often without a reboot or significant downtime, provided hotplug is enabled for the VM and the guest operating system supports it. This ability to adjust resources to meet real-time demand helps maintain consistent performance and a positive user experience.
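A scale-up like this can also be scripted against the Proxmox VE API. The sketch below assumes a `proxmoxer` `ProxmoxAPI` client and uses the VM `config` and `resize` endpoints; the helper names are hypothetical. Memory is given in MiB, and a `+` prefix on the disk size means "grow by this amount".

```python
def build_scale_request(memory_mib=None, cores=None, grow_disk=None):
    """Translate a scale-up request into Proxmox VE API parameters.

    Returns (config_params, resize_params); resize_params is None when no
    disk change is requested. grow_disk is a (disk, extra_gib) pair.
    """
    config = {}
    if memory_mib is not None:
        config["memory"] = memory_mib  # MiB; applied live only with memory hotplug enabled
    if cores is not None:
        config["cores"] = cores  # without CPU hotplug this may stay pending until restart
    resize = None
    if grow_disk is not None:
        disk, extra_gib = grow_disk
        if extra_gib <= 0:
            raise ValueError("disks can only be grown, not shrunk")
        resize = {"disk": disk, "size": f"+{extra_gib}G"}
    return config, resize


def scale_vm(api, node, vmid, **changes):
    """Apply the request through a proxmoxer ProxmoxAPI client (assumed)."""
    config, resize = build_scale_request(**changes)
    vm = api.nodes(node).qemu(vmid)
    if config:
        vm.config.put(**config)  # PUT /nodes/{node}/qemu/{vmid}/config
    if resize:
        vm.resize.put(**resize)  # PUT /nodes/{node}/qemu/{vmid}/resize
    return config, resize
```

For example, `scale_vm(p_api, "pve", 100, memory_mib=8192, grow_disk=("scsi0", 20))` would raise VM 100 to 8 GiB of RAM and grow its `scsi0` disk by 20 GiB. The guest OS still has to extend its partition and filesystem before it can use the new space.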

The freedom to create, move, and scale virtual machines on the fly transforms infrastructure from a bottleneck into a strategic asset that adapts instantly to business needs. To explore this topic further, see our analysis of how virtualization empowers data centers.

Strengthen Disaster Recovery and Business Continuity

One of the most critical benefits of virtualization is its profound impact on disaster recovery (DR) and business continuity.

Recovering a failed physical server is a manual, time-consuming process: procure new hardware, reinstall the operating system, configure applications, and restore data from backups. This process is fraught with risk and lengthy downtime. Virtualization transforms this paradigm by encapsulating an entire server—OS, applications, data, and configuration—into a portable set of files.

This makes a virtual machine (VM) hardware-independent, allowing it to be restored and run on any available host in your environment. A recovery process that previously took hours or days can now be completed in minutes. This dramatically reduces your Recovery Time Objective (RTO)—the maximum acceptable downtime following a disaster—a metric critical to business survival.

Key DR Technologies Unlocked by Virtualization

Virtualization enables a suite of advanced technologies that build robust resilience into your infrastructure, transforming DR from a reactive process into a proactive, automated strategy. For a foundational understanding, review our guide on what is disaster recovery planning.

Three core technologies are particularly impactful:

- Live Migration: This feature moves a running VM from one physical host to another without a noticeable interruption; the guest is paused only briefly during the final switchover. It is essential for performing hardware maintenance or balancing workloads without service interruption.

- High Availability (HA) Clusters: In an HA cluster, hosts monitor each other. If a physical host fails, the VMs it was running are automatically restarted on healthy hosts within the cluster. This restores services in minutes, often without manual intervention.

- Snapshots: A snapshot captures the point-in-time state of a VM's disks and, optionally, its memory. Before performing a risky software update or configuration change, an admin can take a snapshot. If the change causes issues, the VM can be quickly reverted to its pre-change state, turning a potential disaster into a minor incident.
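The pre-change snapshot routine can be reduced to two commands prepared in advance: one to take the snapshot and one to roll back if needed. The sketch below builds them for `qm snapshot` (with `--vmstate 1` to also save RAM) and `qm rollback`. The helper names are hypothetical, and the naming rule (a letter first, then letters, digits, underscores, or hyphens) is an assumption about what Proxmox VE accepts.

```python
import re
import shlex
from datetime import datetime

# Assumed snapshot-name rule: a letter first, then letters, digits, '_' or '-'
SNAPNAME_RE = re.compile(r"^[A-Za-z][A-Za-z0-9_-]+$")


def snapshot_name(prefix, when):
    """Timestamped snapshot name such as 'pre-update-20240501-1200'."""
    name = f"{prefix}-{when:%Y%m%d-%H%M}"
    if not SNAPNAME_RE.match(name):
        raise ValueError(f"invalid snapshot name: {name}")
    return name


def pre_change_commands(vmid, prefix, when, include_ram=True):
    """Return (take_snapshot_cmd, rollback_cmd) for a KVM guest."""
    name = snapshot_name(prefix, when)
    take = ["qm", "snapshot", str(vmid), name]
    if include_ram:
        take += ["--vmstate", "1"]  # also save the guest's memory state
    rollback = ["qm", "rollback", str(vmid), name]
    return take, rollback


take, rollback = pre_change_commands(100, "pre-update", datetime.now())
print(shlex.join(take))
print(shlex.join(rollback))
```

Run the first command before the update; if the change misbehaves, the second returns the VM to its pre-change state.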

By bundling entire servers into portable, hardware-agnostic files, virtualization fundamentally de-risks IT operations. It transforms disaster recovery from a complex, hardware-centric problem into a manageable, software-defined process.

Slashing Your Recovery Time Objective

Combining these technologies results in a dramatic reduction in recovery time. In a physical environment, an RTO measured in days is not uncommon. With a properly configured virtual environment utilizing High Availability and replication, your RTO can be reduced to mere minutes.

This is not just a technical achievement; it provides a direct, measurable financial benefit. Industry estimates commonly put the cost of downtime at thousands of dollars per minute for many businesses. By minimizing service interruption, virtualization delivers a return on investment that extends far beyond hardware savings: it protects revenue and preserves brand reputation.
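The gap between the two recovery models is easiest to see as arithmetic. In the worked calculation below, every step duration and the $1,000-per-minute downtime rate are hypothetical placeholders chosen only to illustrate the days-versus-minutes difference, not measurements.

```python
def outage(steps_minutes, cost_per_minute):
    """Return (total downtime in minutes, estimated cost) for a set of recovery steps."""
    total = sum(steps_minutes.values())
    return total, total * cost_per_minute


# All durations (in minutes) are hypothetical placeholders
physical = {
    "procure and rack hardware": 2 * 24 * 60,
    "reinstall OS": 120,
    "configure applications": 240,
    "restore data": 360,
}
virtual_ha = {"detect host failure": 2, "restart VMs on a healthy host": 5}

for label, steps in (("physical", physical), ("virtual + HA", virtual_ha)):
    minutes, cost = outage(steps, cost_per_minute=1_000)
    print(f"{label}: {minutes:,} min of downtime, ~${cost:,.0f}")
```

With these placeholder figures the physical rebuild takes 3,600 minutes against 7 minutes for an HA restart; the ratio, not the absolute amounts, is the point.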

Improve Security Through Workload Isolation

Beyond cost and agility, a key benefit of server virtualization is its ability to create a fundamentally stronger security posture. In a traditional physical server environment, multiple applications and services often share a single operating system. A breach in one application can allow an attacker to move laterally across the server, compromising all other hosted services.

Virtualization enforces strict workload isolation. Each virtual machine (VM) operates in its own sandboxed environment, separated by the hypervisor from other VMs on the same physical hardware. This creates digital blast doors between your applications.

This separation is a critical security control. If one VM is compromised, for instance through a zero-day vulnerability in a web application, the damage is contained within that single virtual instance. Short of a separate hypervisor-escape exploit, the attacker gains no direct access to the memory or disks of other workloads on the same host, providing powerful, built-in breach containment.

Granular Control with Micro-Segmentation

Modern virtualization platforms like Proxmox VE enable micro-segmentation through virtual switches and software-defined firewalls. This advanced security technique allows you to create granular security zones for individual workloads.

Instead of applying a single, broad firewall policy, you can define specific rules for each VM. For example, you can create a rule that allows your database server to communicate only with your application server on a specific port. To all other VMs and network segments, it is invisible. This "zero-trust" approach drastically shrinks the attack surface. An attacker who compromises a public-facing web server cannot use it to probe for vulnerabilities on a sensitive backend database if no network path exists between them.
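On Proxmox VE, a rule like the database example lives in a per-VM firewall file (`/etc/pve/firewall/<VMID>.fw`). The sketch below renders such a file with a default-deny inbound policy and a single accept rule for the application server. The addresses, port, and helper name are hypothetical. For the rules to take effect, the firewall must also be enabled at the datacenter level and on the VM's network device (`firewall=1`).

```python
import ipaddress


def db_firewall_config(app_server_ip, port, iface="net0"):
    """Render a per-VM Proxmox VE firewall file that drops all inbound
    traffic except TCP from the application server on one port."""
    ipaddress.ip_address(app_server_ip)  # raises ValueError on a malformed address
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid TCP port: {port}")
    lines = [
        "[OPTIONS]",
        "enable: 1",
        "policy_in: DROP",  # default-deny everything inbound
        "",
        "[RULES]",
        f"IN ACCEPT -i {iface} -source {app_server_ip} -p tcp -dport {port} # app server only",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical addresses: app server at 10.0.10.20, PostgreSQL on port 5432
print(db_firewall_config("10.0.10.20", 5432))
```

Because the inbound policy is `DROP`, any VM other than the application server simply gets no response from the database.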

A Powerful Defense Against Ransomware

Virtualization provides a potent weapon against ransomware. VM snapshots are created and managed by the hypervisor, outside the reach of malware running inside the guest, which offers a recovery method that physical servers cannot match. A snapshot is a point-in-time copy of a VM, capturing its disk state and, optionally, its memory.

If a ransomware attack encrypts a VM's files, the standard recovery procedure is to discard the compromised machine and revert to a clean, pre-attack snapshot. This action can be completed in minutes, transforming a potentially catastrophic, multi-day disaster into a minor operational hiccup.

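This process is significantly faster than rebuilding a server from traditional file-level backups. Snapshots complement backups rather than replace them, because they usually reside on the same storage as the VM; off-host backups are still needed in case that storage is lost. The speed and simplicity of reverting from a snapshot make it a crucial component of a modern cybersecurity strategy, limiting the financial and reputational damage of an attack by enabling rapid recovery.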
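The hard part of a snapshot-based recovery is choosing *which* snapshot is clean. A simple rule is to take the newest snapshot created before the estimated time of compromise, optionally minus a safety margin because attackers may be present before encryption starts. The sketch below assumes snapshots are available as `(name, taken_at)` pairs; the function name and sample data are hypothetical.

```python
from datetime import datetime, timedelta


def latest_clean_snapshot(snapshots, compromised_at, safety_margin=timedelta(0)):
    """Return the name of the newest snapshot taken before the cut-off.

    `snapshots` is a list of (name, taken_at) pairs. `safety_margin` moves the
    cut-off earlier than the estimated compromise time. Returns None if no
    snapshot predates the cut-off.
    """
    cutoff = compromised_at - safety_margin
    clean = [(name, taken) for name, taken in snapshots if taken < cutoff]
    if not clean:
        return None
    return max(clean, key=lambda item: item[1])[0]


# Hypothetical nightly snapshots and an estimated compromise time
snaps = [
    ("daily-0501", datetime(2024, 5, 1, 2)),
    ("daily-0502", datetime(2024, 5, 2, 2)),
    ("daily-0503", datetime(2024, 5, 3, 2)),
]
print(latest_clean_snapshot(snaps, datetime(2024, 5, 2, 14), timedelta(hours=24)))
```

A wider safety margin trades more lost data for a higher chance that the restored VM predates the intrusion.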

Simplify Server Management with Centralized Control

Virtualization dramatically simplifies day-to-day IT administration by consolidating the management of disparate physical servers into a single, cohesive interface. The days of managing dozens of individual machines, each with its own login and maintenance schedule, are over.

Platforms like Proxmox VE provide a web-based "single pane of glass" for your entire infrastructure. From this centralized dashboard, administrators can monitor performance, configure networks, allocate resources, and manage backups for every virtual machine (VM) and container. This centralized approach streamlines routine tasks and significantly reduces the potential for human error.
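The same cluster-wide view is available programmatically. Proxmox VE's `GET /cluster/resources?type=vm` returns one entry per VM and container with fields such as `name`, `node`, `type`, `status`, `mem`, and `maxmem`. The sketch below summarizes that list; it assumes a `proxmoxer` `ProxmoxAPI` client named `p_api` for the live call, and `summarize_guests` is a hypothetical helper.

```python
from collections import Counter


def summarize_guests(resources, top_n=3):
    """Summarize guest entries shaped like GET /cluster/resources?type=vm output."""
    status = Counter(r.get("status", "unknown") for r in resources)
    kinds = Counter(r.get("type", "unknown") for r in resources)  # 'qemu' or 'lxc'
    # Rank guests by the share of their allocated memory currently in use
    by_mem = sorted(
        (r for r in resources if r.get("maxmem")),
        key=lambda r: r.get("mem", 0) / r["maxmem"],
        reverse=True,
    )
    return {
        "status": dict(status),
        "type": dict(kinds),
        "top_memory": [(r.get("name"), r.get("node")) for r in by_mem[:top_n]],
    }


# Live usage (assumed proxmoxer client):
# summary = summarize_guests(p_api.cluster.resources.get(type="vm"))
```

Because the function only consumes plain dictionaries, it can be tested offline with saved API output before being pointed at a live cluster.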

Unlocking Automation with APIs

This centralized control extends beyond a GUI to powerful automation capabilities via a rich Application Programming Interface (API). A well-documented API allows you to script and automate nearly any administrative task, which is the bedrock of an efficient, modern IT operation.

Instead of manually executing a multi-step process to provision a new server, an administrator can run a single script that completes the task in seconds. This enables the integration of your virtual infrastructure with CI/CD pipelines and DevOps workflows, which is essential for agile development and deployment.

By leveraging APIs, repetitive administrative tasks are transformed into automated, repeatable processes. This frees your technical team from routine maintenance, allowing them to focus on strategic initiatives that drive business value.

Actionable Example: Automating LXC Container Creation

The following Python script demonstrates how to use the proxmoxer library to connect to the Proxmox API and automatically create a new LXC container. This is a practical example of Infrastructure as Code (IaC). For a deeper dive, review our guide on Infrastructure as Code best practices.

```python
import os

from proxmoxer import ProxmoxAPI

# --- Proxmox API Connection Details ---
# Best practice: use API tokens and environment variables in production
proxmox_host = os.environ.get('PROXMOX_HOST', 'your-proxmox-host')
proxmox_user = 'root@pam'
proxmox_password = os.environ['PROXMOX_PASSWORD']  # never hard-code credentials
proxmox_node = 'pve'

# --- New LXC Container Configuration ---
new_container_id = 205
new_hostname = 'automated-lxc-01'
storage_location = 'local-lvm'
template_name = 'local:vztmpl/ubuntu-22.04-standard_22.04-1_amd64.tar.gz'

# Connect to the Proxmox API (disable SSL verification only in lab environments)
p_api = ProxmoxAPI(proxmox_host, user=proxmox_user, password=proxmox_password, verify_ssl=False)

# Define the new container's parameters
container_options = {
    'ostemplate': template_name,
    'storage': storage_location,
    'rootfs': f'{storage_location}:8',  # allocate an 8 GiB root volume on that storage
    'hostname': new_hostname,
    'password': os.environ['CONTAINER_ROOT_PASSWORD'],  # use a secrets manager in production
    'net0': 'name=eth0,bridge=vmbr0,ip=dhcp',
    'cores': 1,
    'memory': 512,  # MiB
}

# Create the new LXC container. The call starts an asynchronous task on the node
# and returns its task ID (UPID); it does not wait for creation to finish.
upid = p_api.nodes(proxmox_node).lxc.create(vmid=new_container_id, **container_options)

print(f"Started creation of LXC container {new_container_id} ({new_hostname}); task ID: {upid}")
```

This script automates a process that would otherwise require several manual steps, demonstrating how centralized control and API access combine to create a more efficient and scalable IT environment.
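Calls such as `lxc.create` start an asynchronous task on the node and return its task ID (UPID) rather than waiting for completion, so automation usually polls `GET /nodes/{node}/tasks/{upid}/status` until the task reports `status: stopped`, then checks `exitstatus`. The sketch below keeps the polling loop independent of the client by accepting any zero-argument callable; the function name, timeout, and interval are assumptions.

```python
import time


def wait_for_task(get_status, timeout_s=300.0, interval_s=2.0, sleep=time.sleep):
    """Poll a Proxmox VE task until it stops.

    `get_status` is any zero-argument callable returning the task status dict,
    for example (assuming a proxmoxer client):
        lambda: p_api.nodes(node).tasks(upid).status.get()
    Returns True if the task finished with exitstatus 'OK', False otherwise.
    Raises TimeoutError if it is still running after `timeout_s` seconds.
    """
    waited = 0.0
    while True:
        status = get_status()
        if status.get("status") == "stopped":
            return status.get("exitstatus") == "OK"
        if waited >= timeout_s:
            raise TimeoutError(f"task still running after {timeout_s} s")
        sleep(interval_s)
        waited += interval_s
```

Injecting `sleep` keeps the loop easy to test without real delays, and passing a callable means the same helper works for containers, VMs, and backup tasks alike.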

Answering Common Server Virtualization Questions

As organizations adopt server virtualization, several key technical questions arise. Addressing these clarifies the technology and helps ensure a smooth transition.

What Is The Difference Between KVM And LXC?

This is a critical distinction, especially on platforms like Proxmox VE that support both. While both technologies enable workload consolidation, their underlying architectures are fundamentally different.

KVM (Kernel-based Virtual Machine): KVM is a Type-1 hypervisor that provides full hardware virtualization. It creates a complete virtual machine with its own virtualized hardware (CPU, RAM, NIC) and an independent kernel. This allows you to run any operating system, including Windows Server, BSD, or any Linux distribution. KVM offers the highest level of isolation and compatibility.

LXC (Linux Containers): LXC is a form of OS-level virtualization, or containerization. Containers share the host server's Linux kernel. This lightweight architecture makes them incredibly fast, resource-efficient, and ideal for microservices. They spin up almost instantly and have minimal overhead. The trade-off is that they can only run Linux-based applications.
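The trade-offs above boil down to a few questions, which the small sketch below encodes. The helper and its flags are hypothetical and deliberately simplified; real decisions also weigh licensing, backup tooling, and application support.

```python
def choose_guest_type(guest_os, needs_custom_kernel=False, needs_strong_isolation=False):
    """Return 'kvm' or 'lxc' following the KVM/LXC trade-offs."""
    if guest_os.lower() != "linux":
        return "kvm"  # containers share the host's Linux kernel, so other OSes need a full VM
    if needs_custom_kernel or needs_strong_isolation:
        return "kvm"  # own kernel and the strongest isolation boundary
    return "lxc"  # lightweight, near-instant start, minimal overhead


for os_name in ("Linux", "Windows", "FreeBSD"):
    print(os_name, choose_guest_type(os_name))
```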

Does Virtualization Negatively Impact Performance?

This is a common concern. While adding a hypervisor layer does introduce some overhead, modern hypervisors like KVM are highly efficient, particularly when guests use paravirtualized VirtIO drivers for disk and network. For the vast majority of workloads, the performance impact is small and often hard to measure.

When a virtual environment performs poorly, the root cause is usually misconfiguration rather than the virtualization technology itself. Common issues include overcommitting CPU (visible as high CPU ready or steal time), overcommitting RAM (leading to ballooning or host swapping), storage bottlenecks (high I/O wait), and improperly configured virtual networks. Proper capacity planning and performance monitoring are key.

How Difficult Is A Physical To Virtual Migration?

The process of migrating a physical server into a virtual machine, known as P2V (Physical-to-Virtual), is a mature and well-defined procedure with robust tooling available.

Tools like dd for disk imaging, or third-party converters, can create a replica of a physical server's disk; the copy is most reliable when the source server is booted from live media, so its filesystems are not changing during imaging. This disk image is then attached to a new VM. While the technical steps are straightforward, the success of a P2V migration depends on careful planning. Key considerations include accounting for hardware driver differences (Windows guests, for example, need VirtIO drivers installed to use paravirtualized disks and network adapters), planning network reconfigurations, ensuring storage performance meets workload demands, and scheduling an appropriate maintenance window to minimize downtime.
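The two core P2V steps can be prepared as commands ahead of the maintenance window. The sketch below builds a `dd` imaging command (run on the physical server from live media) and a `qm importdisk` command (run on the Proxmox host), which attaches the image to an existing VM as an unused disk. The device, image path, VMID 120, and storage name are hypothetical, and the image path is assumed to be on storage reachable from both machines.

```python
import shlex


def p2v_commands(source_disk, image_path, vmid, storage):
    """Build the imaging and import commands for a P2V migration."""
    if vmid < 100:
        raise ValueError("Proxmox VE VMIDs start at 100")
    return [
        # On the physical server, booted from live media so filesystems are quiescent
        ["dd", f"if={source_disk}", f"of={image_path}", "bs=4M", "status=progress"],
        # On the Proxmox host; the VM must already exist
        ["qm", "importdisk", str(vmid), image_path, storage],
    ]


for cmd in p2v_commands("/dev/sda", "/mnt/migration/web01.img", 120, "local-lvm"):
    print(shlex.join(cmd))
```

After the import, attach the unused disk to the VM, set it as the boot device, and adjust drivers and network settings before the first boot.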

The migration is a one-time effort that unlocks the long-term benefits of simplified management, improved security, and robust disaster recovery capabilities.

Ready to unlock the cost savings and agility of virtualization? ARPHost, LLC provides high-performance bare metal servers and flexible KVM virtual private servers, ideal for building a powerful Proxmox environment. Explore our managed solutions and let our experts help you design a scalable infrastructure at https://arphost.com.
