In the private cloud vs public cloud debate, the core trade-off is between granular control and on-demand convenience. A private cloud provides dedicated, single-tenant infrastructure, offering unparalleled authority over security, performance, and compliance. In contrast, a public cloud operates on shared, multi-tenant resources, engineered for rapid scalability and operational agility. For IT professionals, understanding the architectural and operational differences is crucial for making a sound infrastructure decision.

Understanding Core Cloud Differences

Selecting the right cloud model is a foundational architectural decision that directly impacts cost, security posture, scalability, and operational overhead. The distinction extends far beyond server location; it dictates ownership, management responsibilities, and accountability for every layer of the technology stack, from bare metal hardware to the hypervisor.

A private cloud functions as a dedicated data center, whether deployed on-premises or hosted by a managed service provider. It guarantees exclusive access to resources: servers, storage, and networking hardware are provisioned for a single organization. This single-tenant architecture is why enterprises with stringent data sovereignty requirements or performance-sensitive workloads gravitate toward this model. It provides complete control, enabling deep customization of everything from network security policies on Juniper devices to performance tuning within a Proxmox VE cluster. The decision between different infrastructure models often involves weighing the pros and cons of various cloud and on-premises IT support solutions.

Conversely, a public cloud operates on a massive, shared infrastructure owned and managed by hyperscalers like AWS, Azure, or Google Cloud. In this multi-tenant environment, resources are consumed as a service on a pay-as-you-go basis. The agility is immense; virtual servers, containers, or services can be provisioned in minutes without any physical hardware interaction. However, this model operates on a shared responsibility paradigm. The provider secures the underlying infrastructure, but the customer is responsible for securing their data, applications, and configurations within that environment.

At-a-Glance Comparison: Private vs Public Cloud

To clarify the architectural trade-offs, this table outlines the fundamental differences between private and public cloud models, focusing on technical and operational attributes relevant to IT professionals.

| Attribute | Private Cloud | Public Cloud |
|---|---|---|
| Tenancy | Single-tenant (dedicated resources) | Multi-tenant (shared resources) |
| Control | Full control over hardware and software stack | Limited to provider's service offerings and APIs |
| Cost Model | Capital Expenditure (CapEx) & predictable OpEx | Purely Operational Expenditure (OpEx), variable |
| Scalability | Limited by physical hardware capacity; planned scaling | Virtually unlimited elasticity, on-demand scaling |
| Security | Full control, physical and logical isolation | Shared responsibility model |
| Management | In-house team or managed service provider | Managed by the cloud provider |
| Best For | Stable workloads, compliance, high-performance computing | Variable workloads, rapid development, burst capacity |

Ultimately, choosing between a private and public cloud is a strategic decision that must align with technical requirements and business objectives. For organizations that need deep control over their environment—especially when leveraging powerful open-source virtualization platforms like Proxmox VE—a private cloud architecture offers significant advantages.

A Deep Dive on Security and Compliance

When evaluating private cloud vs. public cloud, the discussion invariably centers on security and compliance. A common misconception is that "private is inherently more secure." The reality is more nuanced; the primary differentiator is the control model, not an intrinsic security advantage.

A private cloud grants an organization complete authority over its entire infrastructure stack. A public cloud, by contrast, operates on a shared responsibility model where security duties are divided between the provider and the customer.

Private Cloud: Security Through Isolation and Granular Control

The cornerstone of private cloud security is physical and logical isolation. Because the hardware is dedicated to a single tenant, the attack surface associated with multi-tenancy is eliminated. There are no "noisy neighbors" consuming resources, and the risk of hypervisor-level side-channel attacks from other tenants is nullified.

This absolute control over data sovereignty ensures that sensitive information remains within defined physical and logical boundaries, a critical requirement for regulated industries. This demand for control is a major driver of market growth. The global private cloud services market, valued at approximately USD 124.6 billion in 2025, is projected to reach USD 618.3 billion by 2035. Those two figures imply a compound annual growth rate of roughly 17% over the ten-year period, growth largely fueled by data security concerns and stringent regulatory frameworks. For more details, consult the private cloud services market report.

Granular Control Down to the Command Line

In a private cloud, security policies can be implemented with surgical precision. Using enterprise-grade networking equipment from vendors like Juniper, engineers can configure complex firewall rules, establish secure VLANs, and implement micro-segmentation to isolate workloads in development, staging, and production environments.

This level of control extends to identity and access management (IAM). On a platform like Proxmox VE, the principle of least privilege can be strictly enforced. To meet HIPAA compliance, for instance, a unique user role can be created with permissions restricted solely to managing a single virtual machine containing patient data, preventing access to the underlying storage or network configurations.

Here is a practical CLI example of implementing this in Proxmox VE:

# Step 1: Create a new role with minimal permissions (VM power management and console access)

pveum roleadd HIPAA-VMAccess -privs "VM.PowerMgmt VM.Console"

# Step 2: Create the dedicated group (it must exist before a user can be added to it)

pveum groupadd hipaa_team -comment "HIPAA VM operators"

# Step 3: Create a user and assign them to the group for accountability

pveum useradd hipaa_user@pve -group hipaa_team

# Step 4: Apply the restrictive role to the group for a specific VM (ID 101)

pveum aclmod /vms/101 -group hipaa_team -role HIPAA-VMAccess

This demonstrates how granular access control can be defined and audited at the infrastructure level—a fundamental requirement for many compliance frameworks.

The Public Cloud's Shared Responsibility Model

Public cloud providers like AWS, Azure, and GCP operate on a shared responsibility model. The provider is responsible for the "security of the cloud" (physical data centers, hardware, core network), while the customer is responsible for "security in the cloud."

This places the burden on the customer to manage:

- Data Security: Implementing encryption at rest and in transit.

- Identity and Access Management (IAM): Correctly configuring user roles, permissions, and policies.

- Network Security: Configuring security groups, network ACLs, and virtual private clouds (VPCs).

- Application and OS Security: Hardening virtual machine images, patching operating systems, and securing application code.

The primary security challenge in the public cloud is not the absence of tools but the complexity of their configuration. A single misconfiguration in an S3 bucket policy or a security group rule can lead to catastrophic data exposure.
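To make the misconfiguration risk concrete, the sketch below flags rules that expose administrative or database ports to any source address. The rule format (name, port, source CIDR), the rule names, and the list of sensitive ports are all hypothetical and invented for illustration; real providers expose this data through their own APIs and CLIs, so treat this as a pattern rather than a ready-made audit tool.

```shell
# Hypothetical rule export, one rule per line: name,port,source_cidr
# (an illustrative format, not a real provider export).
rules='web-https,443,0.0.0.0/0
admin-ssh,22,0.0.0.0/0
db-postgres,5432,10.0.0.0/16
rdp-jump,3389,0.0.0.0/0'

# Print the name of every rule that opens a sensitive port to any source address.
find_open_admin_rules() {
  printf '%s\n' "$1" | awk -F',' '
    BEGIN { sensitive["22"] = 1; sensitive["3389"] = 1; sensitive["5432"] = 1 }
    ($2 in sensitive) && $3 == "0.0.0.0/0" { print $1 }
  '
}

find_open_admin_rules "$rules"
```

With the sample data, the SSH and RDP rules are reported, while the database rule passes because its source is restricted to a private range.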

While public cloud providers offer a vast array of security services, effective implementation requires deep expertise. The model relies on trusting the provider's logical isolation mechanisms in a multi-tenant environment. These systems are highly robust but present a different risk profile compared to a dedicated private cloud. This underscores the importance of a robust data protection strategy, such as implementing immutable backup solutions to safeguard critical data regardless of its location.

Comparing Performance and Scalability Models

When analyzing private cloud vs public cloud, performance and scalability represent a trade-off between predictable, dedicated power and elastic, on-demand agility. From an engineering standpoint, this distinction dictates how resources are provisioned, managed, and optimized for specific workloads.

A private cloud, built on dedicated bare metal hardware, delivers performance predictability. IT teams have direct control over the entire infrastructure stack—CPUs, storage arrays, network switches—allowing for meticulous tuning to meet the demands of specific applications. This is critical for workloads where consistent low-latency performance is a strict requirement, such as high-performance computing (HPC), large-scale databases, or real-time financial trading platforms. The ability to eliminate resource contention and guarantee a specific level of I/O operations per second (IOPS) is a key advantage.

Fine-Tuning Private Cloud Performance

In a private cloud architecture, performance is an engineering discipline.

- Storage Optimization: Deploy a distributed storage system like Ceph to create distinct performance tiers. High-transaction database logs can be placed on NVMe SSDs for maximum throughput, while archival data resides on cost-effective HDDs.

- Network Segmentation: With dedicated networking hardware from vendors like Juniper, you can create isolated, high-throughput networks for storage traffic (e.g., Ceph replication) or VM live migrations, ensuring these background processes never impact application performance.

- CPU Pinning: For latency-sensitive applications, a virtual machine's vCPUs can be pinned to specific physical CPU cores. Pinning keeps the VM's threads on those cores, which reduces scheduler migrations and cache misses and gives more consistent processing power. For the cores to be truly exclusive, other VMs and host tasks must also be kept off them.

A significant advantage of a private cloud is the elimination of the "noisy neighbor" problem. As the sole tenant, you are insulated from resource contention for CPU cycles, network bandwidth, or storage IOPS—an unpredictable variable inherent to multi-tenant public cloud environments.
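The CPU pinning technique from the list above can be sketched as a small dry-run helper. It assumes Proxmox VE 7.3 or later, where `qm set` accepts an `--affinity` option listing host cores; the VM ID `101`, the core range `2-5`, and the `APPLY` switch are illustrative assumptions, not values from a real deployment.

```shell
# Build the command that restricts a VM's processes to the given host cores.
# The command is executed only when APPLY=1 and the qm tool is installed;
# in every other case it is printed so it can be reviewed first.
pin_vm_cores() {
  vmid="$1"; cores="$2"
  cmd="qm set $vmid --affinity $cores"
  if [ "${APPLY:-0}" = "1" ] && command -v qm >/dev/null 2>&1; then
    $cmd
  else
    echo "dry-run: $cmd"
  fi
}

pin_vm_cores 101 "2-5"
```

Defaulting to a dry run is a deliberate choice here: affinity changes affect scheduling on a production host, so printing the command before applying it is the safer habit.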

Scaling a Proxmox Private Cloud

Scaling a private cloud, such as a Proxmox VE cluster, is a deliberate, architectural process that offers controlled, predictable growth. It typically involves adding new bare metal nodes to the cluster, which requires capacity planning, hardware procurement, and integration.

Best practices for scaling a Proxmox cluster include:

- Standardize Hardware: Use identical or similar hardware for all cluster nodes to ensure predictable performance and simplify management. Mismatched nodes can cause resource imbalances and complicate VM migrations.

- Maintain Capacity Headroom: A buffer of 20-30% spare capacity is recommended. This allows the cluster to absorb unexpected node failures via High-Availability (HA) and accommodate gradual workload growth without emergency hardware procurement.

- Implement High-Availability (HA): Configure Proxmox HA groups for critical VMs. This feature automates the process of restarting VMs on other nodes if a host fails, ensuring service continuity during outages or planned maintenance.
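The headroom guideline above can be checked with simple arithmetic. The following sketch computes the spare capacity of a cluster and tests whether the remaining nodes could absorb the loss of one host. The node count, per-node RAM, and allocated RAM are hypothetical numbers chosen for illustration, and memory is used as the only capacity dimension to keep the example short.

```shell
# Percentage of total capacity that is currently unused (integer, rounded down).
# Arguments: node count, RAM per node (GiB), RAM allocated to VMs (GiB).
headroom_pct() {
  nodes="$1"; per_node="$2"; used="$3"
  total=$(( nodes * per_node ))
  echo $(( (total - used) * 100 / total ))
}

# Succeed only if the workload still fits after one node is lost.
survives_node_loss() {
  nodes="$1"; per_node="$2"; used="$3"
  remaining=$(( (nodes - 1) * per_node ))
  [ "$used" -le "$remaining" ]
}

# Hypothetical cluster: 3 nodes with 256 GiB each, 540 GiB allocated to VMs.
echo "Headroom: $(headroom_pct 3 256 540)%"
if survives_node_loss 3 256 540; then
  echo "One node failure can be absorbed"
else
  echo "One node failure cannot be absorbed"
fi
```

In this example the cluster has about 29% headroom yet still cannot absorb a node failure, because losing one of three nodes removes a third of the capacity. As a rule of thumb, full failover of a single node needs spare capacity of at least 1/N of the cluster, so a 20-30% buffer covers it only in clusters of four or more nodes.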

Public Cloud Elasticity and On-Demand Scale

The public cloud is architected for near-limitless, on-demand scalability, making it ideal for workloads with unpredictable traffic patterns. Instead of provisioning hardware for peak capacity, resources are consumed as needed, with elasticity managed by automated services.

Services like AWS Auto Scaling Groups or Azure Virtual Machine Scale Sets exemplify this model. They automatically add or remove virtual machine instances based on predefined metrics like CPU utilization or network I/O. When a marketing campaign drives a traffic surge, the system scales out horizontally within minutes. As traffic subsides, it scales back in to optimize costs.
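The scaling decision described above can be approximated with a proportional rule: scale the instance count by the ratio of the observed metric to the target, then clamp the result to configured limits. This is a simplified sketch and not the exact algorithm of any provider; real services add their own metric aggregation, cooldown periods, and policy types. The function name and all parameters are assumptions made for illustration.

```shell
# Proportional scaling rule: desired = ceil(current * observed / target),
# clamped to the range [min, max].
# Arguments: current instances, observed metric (%), target metric (%), min, max.
desired_instances() {
  current="$1"; observed="$2"; target="$3"; min="$4"; max="$5"
  desired=$(( (current * observed + target - 1) / target ))   # integer ceiling
  [ "$desired" -lt "$min" ] && desired="$min"
  [ "$desired" -gt "$max" ] && desired="$max"
  echo "$desired"
}

desired_instances 4 90 60 2 20    # traffic surge: CPU at 90% against a 60% target
desired_instances 4 30 60 2 20    # traffic subsides: CPU at 30%
desired_instances 10 95 50 2 12   # the result is capped by the configured maximum
```

The three calls illustrate scale-out, scale-in, and the effect of the upper bound, which is what keeps a misbehaving metric from provisioning an unbounded number of instances.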

Serverless computing platforms like AWS Lambda or Azure Functions extend this concept further by abstracting away all underlying infrastructure. Code is executed in response to events, and the provider manages all provisioning and scaling. This model is highly efficient for stateless, intermittent tasks, as billing is often calculated in milliseconds of compute time. This dynamic elasticity remains a core differentiator in the private cloud vs public cloud discussion, particularly for businesses with highly variable resource demands.

A Practical Cost Analysis: TCO vs. OpEx

The financial comparison between private cloud vs. public cloud centers on two distinct models: upfront investment versus pay-as-you-go consumption. This is the classic trade-off between Capital Expenditure (CapEx) and Operational Expenditure (OpEx), and the chosen model profoundly impacts IT budgeting and financial planning.

A private cloud is a CapEx-intensive model. It requires an initial investment in hardware—servers, storage, and networking equipment. Beyond the initial purchase, the long-term cost is evaluated using a Total Cost of Ownership (TCO) analysis. The public cloud is a pure OpEx model, eliminating upfront hardware costs in favor of a recurring monthly bill based on resource consumption.

Calculating Private Cloud TCO

A comprehensive TCO analysis for a private cloud must account for all direct and indirect costs over the asset's lifecycle, typically 3-5 years.

A realistic TCO breakdown includes:

- Server Hardware: Physical servers with specified CPU, RAM, and storage configurations.

- Networking Gear: Switches, routers, and firewalls from vendors like Juniper to provide secure connectivity.

- Software Licensing: Costs for the virtualization platform, operating systems, and management tools. Proxmox VE itself is open source and carries no license fee, although optional support subscriptions are paid.

- Infrastructure Costs: Recurring expenses for power, cooling, and rack space in a colocation facility.

- IT Labor: The salaries of skilled engineers required to design, deploy, manage, and maintain the infrastructure. This is often the largest ongoing operational cost.

Here is a simplified 3-year TCO model for a three-node Proxmox VE high-availability cluster:

| Cost Component | Year 1 (CapEx + OpEx) | Year 2 (OpEx) | Year 3 (OpEx) | 3-Year TCO |
|---|---|---|---|---|
| Server Hardware (3 Hosts) | $24,000 | $0 | $0 | $24,000 |
| Networking Hardware | $5,000 | $0 | $0 | $5,000 |
| Power & Colocation | $7,200 | $7,200 | $7,200 | $21,600 |
| IT Management (Labor) | $45,000 | $45,000 | $45,000 | $135,000 |
| Annual Total | $81,200 | $52,200 | $52,200 | $185,600 |

After the initial CapEx investment, annual costs stabilize into a predictable operational expense, primarily driven by labor and data center fees.
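The arithmetic behind the table can be reproduced in a few lines, which makes it easy to substitute your own figures. The sketch below uses the numbers from the table above; the variable names are illustrative.

```shell
# Figures copied from the 3-year TCO table (USD).
servers=24000           # one-time CapEx, year 1
network=5000            # one-time CapEx, year 1
colo_per_year=7200      # recurring power and colocation
labor_per_year=45000    # recurring IT management
years=3

capex=$(( servers + network ))
opex_per_year=$(( colo_per_year + labor_per_year ))
tco=$(( capex + opex_per_year * years ))

echo "Year 1 total:      $(( capex + opex_per_year ))"
echo "Each later year:   $opex_per_year"
echo "${years}-year TCO:        $tco"
```

Separating one-time and recurring costs this way also shows how strongly labor dominates: it accounts for $135,000 of the $185,600 total in this model.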

The Public Cloud OpEx Model

The public cloud operates on a pure OpEx model, making it attractive for startups and businesses seeking to preserve capital. However, its primary challenge is cost predictability.

A typical monthly public cloud bill includes charges for:

- Compute Instances (VMs): Billed by the second or hour, based on instance type and size.

- Block Storage (EBS/Managed Disks): Billed per gigabyte-month.

- Data Egress: A significant and often overlooked cost for transferring data out of the cloud provider's network.

- Managed Services: Fees for databases, load balancers, monitoring tools, and other platform services.

- Support Plan: A recurring fee for access to technical support.

The greatest financial challenge of the public cloud is cost volatility. Unforeseen traffic spikes, misconfigured auto-scaling policies, or large data transfers can cause significant, unexpected increases in monthly expenses, complicating budget forecasting.

While both models are growing, public cloud spending is expanding rapidly, with projections suggesting it could reach $723.4 billion in 2025. This growth is driven by businesses of all sizes, with 54% of small and mid-sized businesses expected to spend over $1.2 million annually on cloud services. More trends are available in these cloud computing statistics on Brightlio.com.

The Tipping Point for Cost-Effectiveness

The most cost-effective solution depends entirely on the workload profile. For stable, predictable workloads that operate 24/7, a private cloud often achieves a lower TCO over a 3-5 year horizon. Once the initial hardware investment is amortized, the fixed operational costs are typically lower than the consumption-based billing of a public cloud.

The "tipping point" occurs when a consistent monthly public cloud bill exceeds the amortized TCO of a comparable private cloud. At this stage, investing in dedicated hardware becomes the more financially prudent decision.
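The tipping point can be estimated numerically. The sketch below derives the amortized monthly cost from the 3-year TCO model earlier in this article and computes the first month in which cumulative public cloud spend catches up with cumulative private cloud spend. The monthly cloud bill of $6,000 is a hypothetical input, and the model deliberately ignores hardware refresh, financing, and price changes.

```shell
# Inputs from the 3-year TCO model (USD).
capex=29000            # servers + networking, paid up front
private_monthly=4350   # (7,200 + 45,000) / 12
months=36

# Amortized monthly TCO, rounded up to the next dollar.
amortized_monthly=$(( (capex + private_monthly * months + months - 1) / months ))

# First month in which cumulative cloud spend is at least the cumulative
# private spend. Prints "never" if the cloud bill does not exceed the
# private monthly OpEx.
# Arguments: upfront CapEx, private OpEx per month, cloud bill per month.
breakeven_months() {
  upfront="$1"; private_cost="$2"; cloud_cost="$3"
  diff=$(( cloud_cost - private_cost ))
  if [ "$diff" -le 0 ]; then
    echo "never"
  else
    echo $(( (upfront + diff - 1) / diff ))   # integer ceiling
  fi
}

cloud_bill=6000   # hypothetical steady monthly public cloud bill
echo "Amortized private cost per month: $amortized_monthly"
echo "Break-even month: $(breakeven_months "$capex" "$private_monthly" "$cloud_bill")"
```

Under these assumptions, any steady cloud bill above roughly $5,156 per month makes the private option cheaper over three years, and at $6,000 per month the upfront investment is recovered in month 18.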

Matching Workloads to the Right Cloud Environment

The private cloud vs public cloud decision should be driven by workload characteristics. Aligning applications with the appropriate infrastructure based on their performance, security, compliance, and cost profiles is essential for operational efficiency and financial sustainability.

The public cloud dominates the market in terms of spending. Global investment in public cloud infrastructure surged by over $20 billion (25%) between Q2 2024 and Q2 2025, reaching $99 billion for the quarter. The market is led by Amazon Web Services (AWS) with a 30% share, followed by Microsoft Azure and Google Cloud. A detailed analysis can be found in this report on Q2 2025 cloud market share from CRN.com.

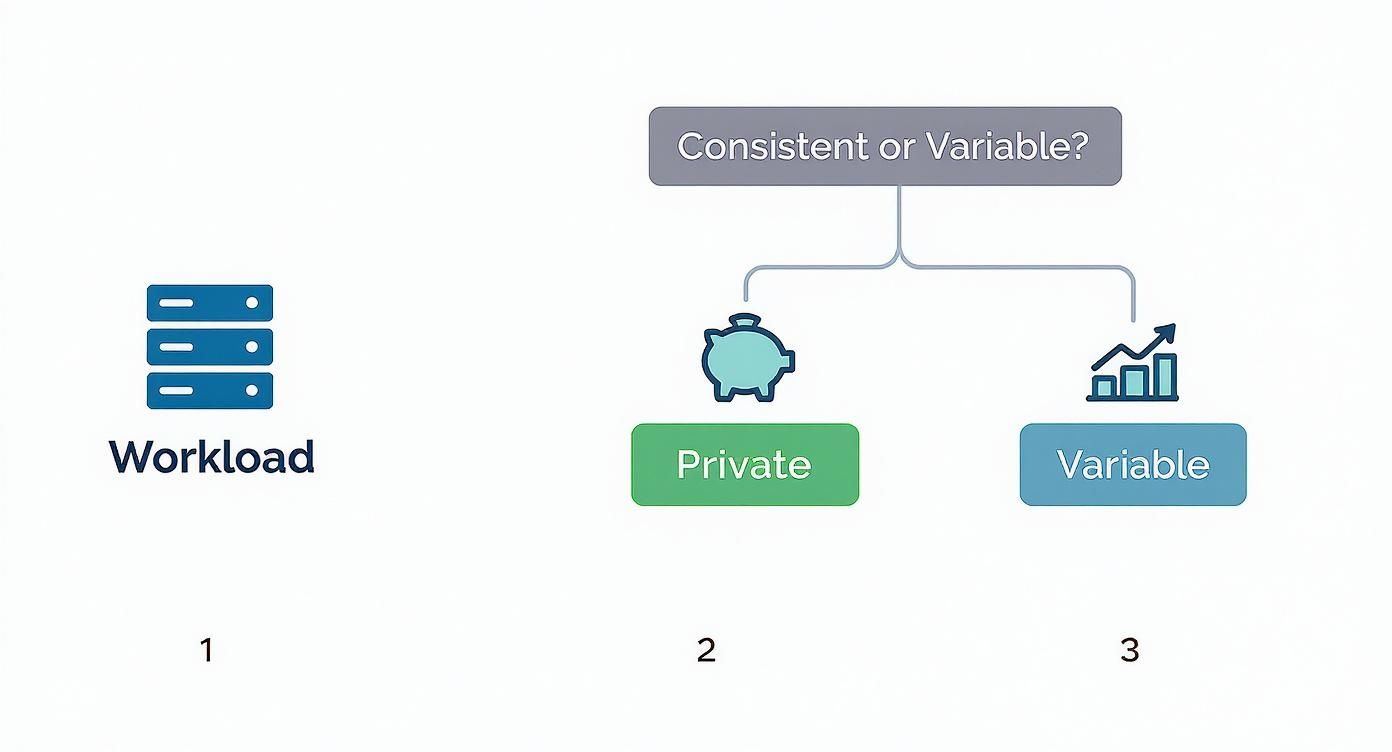

This infographic illustrates a decision-making framework for workload placement, contrasting the predictable TCO of a private cloud with the pay-as-you-go flexibility of a public one.

As shown, workloads with stable resource requirements are well-suited for a private cloud's TCO model, while variable workloads benefit from the public cloud's OpEx structure.

Ideal Workloads for a Private Cloud

A private cloud excels where control, predictable performance, and stringent data governance are paramount. Its customizable nature makes it the superior choice for specific, high-value applications.

- Legacy Applications: Many mission-critical applications were not designed for cloud-native environments and have rigid dependencies on specific hardware, operating systems, or network configurations. A private cloud built on a flexible platform like Proxmox VE allows you to create a bespoke environment that meets these legacy requirements.

- Strict Compliance and Data Sovereignty: For workloads governed by regulations like GDPR, HIPAA, or PCI DSS, a private cloud provides auditable control over data residency. You can physically and logically enforce where data is stored and processed and implement granular security policies (such as specific firewall rules on Juniper devices) to satisfy compliance auditors.

- Latency-Sensitive Platforms: High-frequency trading, real-time analytics, and industrial IoT systems demand ultra-low latency and deterministic performance. In a private cloud, compute and storage can be co-located on high-performance bare metal, and techniques like CPU pinning can be used to minimize hypervisor-induced jitter, keeping response times consistent at the microsecond level.

The key technical advantage is deterministic performance. By engineering the entire stack from bare metal up, you eliminate the performance variability inherent in a multi-tenant environment—a critical factor for applications where every millisecond is vital.

Prime Use Cases for the Public Cloud

The public cloud's strengths lie in its massive scale, elasticity, and pay-as-you-go model, making it ideal for dynamic, experimental, or resource-intensive workloads.

- Scalable Web Applications and E-commerce: For applications with unpredictable traffic, the public cloud's elasticity is unparalleled. Auto-scaling groups can provision or de-provision servers in minutes to match real-time demand, ensuring a consistent user experience during traffic spikes without the cost of maintaining idle peak-capacity hardware.

- Big Data Analytics and AI/ML Training: Training complex machine learning models or processing large datasets may require thousands of CPU or GPU cores for a short duration. The public cloud allows organizations to rent this capacity on-demand, paying only for the compute time used and avoiding the massive capital expenditure for specialized hardware that would otherwise be underutilized.

- Cost-Effective Disaster Recovery (DR): Maintaining a fully redundant secondary data center is cost-prohibitive for many businesses. The public cloud offers a viable DR solution. Critical data and applications can be replicated to a provider's region, with compute resources provisioned only during a failover event, making robust DR strategies financially accessible.

Choosing Your Strategy: The Hybrid Cloud Solution

The private cloud vs. public cloud discussion often incorrectly frames it as a binary choice. For most modern enterprises, the optimal strategy is a hybrid cloud model that leverages the distinct advantages of both. This approach involves placing workloads in the environment where they are best suited from a technical, financial, and compliance perspective.

By viewing private and public clouds as complementary tools, organizations can run stable, predictable applications on private infrastructure to optimize costs and control, while tapping into the public cloud for its massive scale, specialized services, and on-demand capacity.

A Framework For Hybrid Deployment

A successful hybrid cloud requires a deliberate architectural strategy. Workloads must be matched to the appropriate environment to prevent performance bottlenecks, security gaps, and unforeseen costs.

A practical deployment framework includes:

- Core, Predictable Workloads: Mission-critical systems such as ERP platforms, internal databases, and legacy applications belong on a private cloud, where performance can be guaranteed and the long-term TCO is favorable.

- Burst Capacity and Variable Workloads: Use "cloud bursting" to handle demand spikes. An e-commerce application running on a private cloud can automatically offload excess traffic to a public provider during a sales event, maintaining performance without over-provisioning private resources.

- Disaster Recovery (DR): The public cloud serves as an ideal and cost-effective DR target. Critical VMs from a private Proxmox VE cluster can be replicated to a public cloud region, with compute resources remaining dormant until a disaster is declared.

- Specialized Services: Leverage public cloud platforms for services that are impractical to build in-house, such as large-scale AI/ML model training, global content delivery networks (CDNs), or big data analytics platforms.
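The framework above can be expressed as a small decision rule. The sketch below is a deliberately simplified illustration: the three input traits, their allowed values, and the precedence of compliance and latency over demand pattern are assumptions chosen to mirror the list, not a complete placement policy.

```shell
# Suggest a placement for a workload.
# Arguments:
#   regulated          yes/no   (subject to data residency or compliance rules)
#   latency_sensitive  yes/no   (needs deterministic, low-latency performance)
#   demand             steady | burst | variable
place_workload() {
  regulated="$1"; latency_sensitive="$2"; demand="$3"
  if [ "$regulated" = "yes" ] || [ "$latency_sensitive" = "yes" ]; then
    echo "private"
  elif [ "$demand" = "variable" ]; then
    echo "public"
  elif [ "$demand" = "burst" ]; then
    echo "hybrid: private baseline, public burst"
  else
    echo "private"
  fi
}

place_workload yes no steady      # e.g. internal database with patient records
place_workload no no burst        # e.g. e-commerce site with seasonal sales events
place_workload no no variable     # e.g. short-lived ML training jobs
```

In practice a real assessment would also weigh cost, data gravity, and dependencies on provider-specific services, but encoding even a rough rule like this makes placement decisions explicit and reviewable.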

A hybrid model provides the best of both worlds: complete control over sensitive data and mission-critical applications on a private cloud, combined with access to the innovation and scale of the public cloud.

Managed Services In Hybrid Environments

Architecting and managing a hybrid environment is complex. It involves establishing secure networking between disparate platforms, unifying security policies, and implementing comprehensive monitoring. A managed service provider can be instrumental in this process. As you evaluate public cloud components, a guide on how to choose your cloud provider can help assess the technical merits of AWS, Azure, and GCP.

An expert partner can design and implement the entire solution, establishing secure connectivity (e.g., VPN or Direct Connect) between a private Proxmox cluster and a public cloud. They can also deploy integrated monitoring and security tools to provide a "single pane of glass" view, ensuring operational stability, proactive threat detection, and cost optimization across the hybrid landscape.

Common Questions, Answered

When evaluating private vs. public cloud, several key technical questions consistently arise. Here are concise, practical answers.

Is a Private Cloud Always More Secure?

No. A private cloud provides the potential for higher security through complete control and physical isolation, but it is not inherently more secure. Its security is entirely dependent on the expertise of the team managing it.

A poorly configured private cloud can be significantly more vulnerable than a well-architected public cloud environment managed by providers that invest billions in security infrastructure and personnel. The key difference is the security model: full control and responsibility in a private cloud versus a shared responsibility model in a public one.

Can I Migrate from Public to Private Cloud?

Yes, this process, known as repatriation, is feasible but complex. Migrating workloads from a public cloud to private infrastructure requires meticulous planning for data transfer, application compatibility analysis, and downtime management.

Workloads built on proprietary, cloud-native services (e.g., AWS Lambda, Azure Functions, Google BigQuery) are particularly challenging to repatriate. They often require significant re-architecting to run on a private virtualization platform like Proxmox VE.

When Is a Private Cloud More Cost-Effective?

A private cloud generally becomes more cost-effective for stable, predictable workloads with a long-term operational horizon of 3-5 years. After the initial capital expenditure (CapEx) for hardware is amortized, the predictable ongoing operational costs are often lower than the variable, consumption-based billing of a public cloud.

This is especially true for workloads with high data egress requirements or sustained high resource utilization, where public cloud costs can escalate rapidly.

At ARPHost, LLC, we specialize in designing, deploying, and managing high-performance private clouds built on Proxmox VE. Our experts provide end-to-end services, from workload analysis and TCO modeling to building secure, scalable infrastructure that gives you complete control over your IT environment.

Learn more about our managed Proxmox private clouds and how they can optimize your IT strategy.
